In the current cyber-age, everything is conveniently high-tech and wireless. So what would happen if these computers and artificial programs (GPS, Siri) could think for themselves and be completely autonomous? The concept of the singularity, or strong artificial intelligence (A.I.), describes a point at which computers or machines will be able to think and operate at an advanced level of intelligence, one that would theoretically exceed even the brightest humans. Advocates of the singularity include inventor and futurist Ray Kurzweil, who has been very outspoken about his beliefs. Though the singularity is still some way off, would the potential benefits of strong A.I. outweigh the potential risks?

Should We Be Worried About Artificial Intelligence?

Elon Musk, co-founder of Tesla Motors, recently voiced his unease about the possibility of superintelligent machines. Musk believes that if A.I. becomes too advanced, it could be dangerous and pose a threat to the human race. He summed up his feelings on the subject in a tweet: “Hope we’re not just the biological boot loader for digital super intelligence. Unfortunately, that is increasingly probable.”

Musk is not alone in his reservations about artificial intelligence. James Barrat, the author of Our Final Invention: Artificial Intelligence and the End of the Human Era, shares Musk’s sentiments. He explained these fears to Smithsonian.com: “To succeed they’ll seek and expend resources, be it energy or money. They’ll seek to avoid the failure modes, like being switched off or unplugged. In short they’ll develop drives, including self-protection and resource acquisition-drives much like our own. They won’t hesitate to beg, borrow, steal and worse to get what they need.” Barrat goes on to say that the Department of Defense is pushing to develop war drones, despite opposition from people who are uncomfortable being protected by superintelligent “killing machines.” He argues that because ethics and a moral code can’t be built into these machines, they will always carry significant risk.

How Can We Handle These Risks and Prevent Future Problems?

Barrat notes that in the 1970s, DNA researchers suspended their projects and gathered for a conference at Asilomar in Pacific Grove, California. They used the conference to develop basic safety protocols for DNA research, and regulations for the field were defined as a direct result. This work also helped pave the way for the development of gene therapy. A similar conference, this time for A.I. developers, could be just as beneficial.

Who Are The Supporters And What Are The Benefits?

Despite the risks, there are many advocates for A.I. Some people believe that these advanced superhuman machines could lead to huge breakthroughs in science and health. Kurzweil believes that continued upgrades and improvements to computers and other machines, culminating in the eventual “singularity,” would be highly advantageous for the human race. Possible technologies include computer implants that enhance our brains and nanobots that deliver more oxygen to our bodies. Earlier this year, Kurzweil stated, “It will not be us versus the machines… but rather, we will enhance our own capacity by merging with our intelligent creations.”

Roboticist Rodney Brooks is also optimistic, insisting that these robots will be our allies rather than threats. Gary Marcus, a psychology professor at NYU, says in PBS’s Off Book that although superintelligent machines could cause problems, they could also help us by improving medicine, enabling better surgeries and diagnoses, and replacing taxi drivers, which would improve safety. Of course, this could in turn put thousands of people out of work. Beyond the health and safety benefits, there is also a huge economic incentive behind artificial intelligence, with estimates of the boost to the economy ranging up to an additional trillion dollars per year.

Is the Singularity Possible?

Reaching the singularity would mean that a machine could equal a human being in every respect. It would be able to do everything humans can, in addition to having some degree of consciousness. Present-day computers can reason to an extent but still lack broad knowledge of the real world. Yann LeCun of the Center for Data Science at NYU says that superintelligence could eventually be possible.

LeCun suggests that replicating human intelligence in machines would be difficult because the human brain is very complex and the real world involves many variables. However, consider the comparison between a bird and an airplane: a plane doesn’t flap its wings and has no feathers, yet it flies. If the same principle applies to intelligence, the singularity may be possible.

It’s fair to say that if the singularity eventually comes to fruition, the product will be humanlike. Yet it could also turn out to be something entirely different from both humans and the machines we imagine. As long as proper precautions are taken and we maintain a clear understanding of what artificial intelligence can do, the singularity shouldn’t pose a risk. But then again, only time will tell.

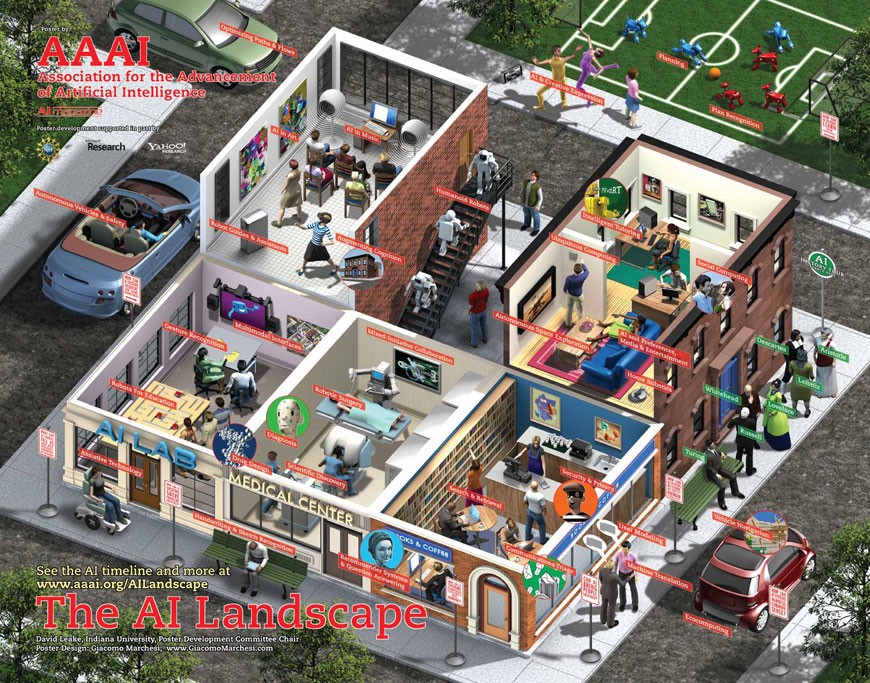

Infographic provided by the Association for the Advancement of Artificial Intelligence.